Data-Centric AI

By Ananya Avasthi & Rohan Khanna

A new approach called Data-Centric AI has attracted a lot of attention in the AI world over the last 6 to 9 months. Building an AI system requires both data and algorithms, and dealing with data accounts for 80% of a data scientist's time on a machine learning project. Despite this, a survey of current research efforts found that 99% of them focus only on improving the model.

With only 1% of research focused on data, there is a pressing need to bring the AI community's attention to this gap. In a data-centric architecture, data is treated as a valuable and adaptable asset rather than a costly afterthought. Data-centricity makes security, integration, portability, and analysis much easier, and it delivers insights more quickly across the whole data value chain. Most industries are adopting AI solutions, and while AI models have improved over time, a fundamental shift is required to fully realize AI's promise.

“Instead of focusing on the code, companies should focus on developing systematic engineering practices for improving data in ways that are reliable, efficient, and systematic. In other words, companies need to move from a model-centric approach to a data-centric approach.”

— Andrew Ng, CEO and Founder of LandingAI

Why Does Data-Centric AI Matter?

Companies from a variety of industries (including automotive, electronics, and medical device manufacturing) have improved how they deploy AI and deep learning-based solutions by adopting a data-centric approach. Data scientists have found that it is easier to get an algorithm to do what you want if you feed it high-quality data rather than data of mixed quality. The following are some of the benefits we've experienced from adopting a data-centric approach instead of the traditional model-centric approach:

- Computer vision applications built 10x faster

- Time to deploy applications reduced by 65%

- Yield and accuracy improved by up to 40%

- Cleaning up the noise in the data can deliver the same accuracy gain as doubling the number of training examples

Model-Centric Approach vs Data-Centric Approach

A model-centric approach focuses on improving the algorithm, code, and model of an AI system, while a data-centric approach keeps the model largely fixed and focuses on systematically improving the data. Andrew Ng, widely regarded as one of the leading pioneers of modern artificial intelligence, has a very clear opinion on the matter: he believes AI ecosystems need to move from a model-centric to a data-centric approach.

Work on models has driven phenomenal progress in AI, but for many practical applications we are reaching a point where the code (or model) is essentially a solved problem and can simply be reused from open-source projects. Further optimizing these models often yields little worthwhile improvement in performance; systematically engineering the data, by contrast, can improve performance notably. So, rather than asking “How can you change the model to improve performance?” you should ask “How can you systematically change your data (inputs or labels) to improve performance?”

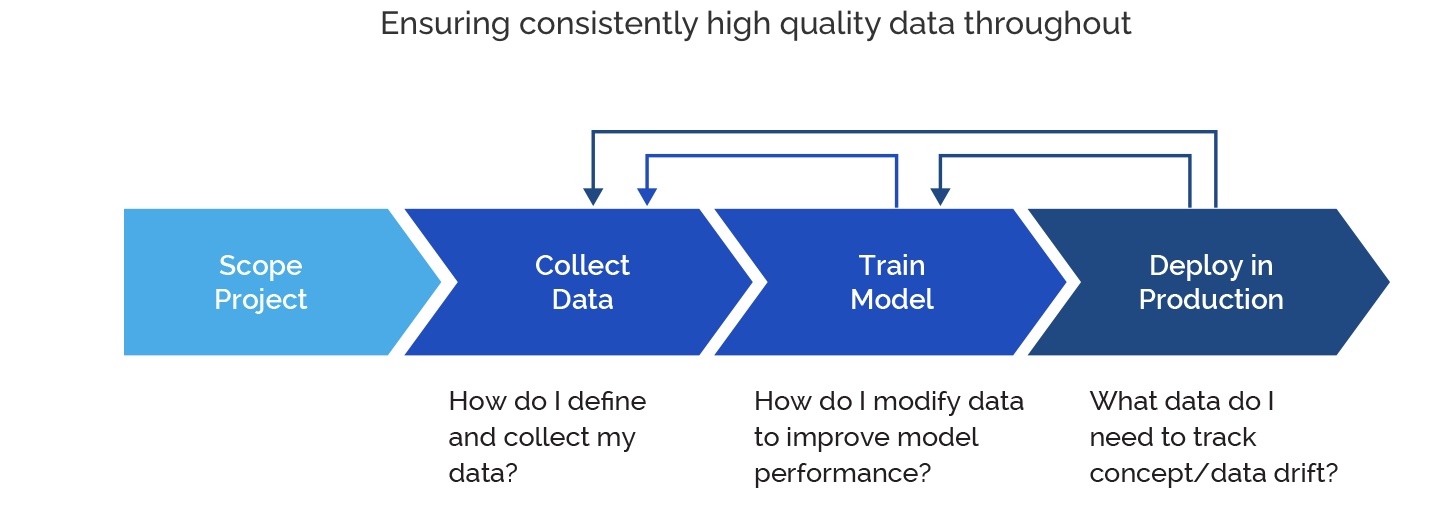

Just as the model-centric approach continuously improves the algorithm, the data-centric approach iteratively engineers and improves the data throughout the AI system's lifecycle. AI practitioners train the model on the data, perform error analysis to decide which segment of the data needs further engineering, and then improve that data by working on both the labels and the inputs themselves. This process is repeated throughout the system's lifecycle. As noted above, clean, high-quality data lets a model learn faster and reach higher accuracy. The key is consistent labeling.
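The error-analysis step of this loop can be sketched in a few lines of Python. This is a minimal sketch, assuming you already have model predictions on a held-out set and that each example carries a hypothetical segment tag (for example, a defect type or lighting condition); the function names and data are illustrative, not part of any library. It ranks segments by error rate so you know which slice of data to re-label or augment next.

```python
from collections import defaultdict


def error_rate_by_segment(examples):
    """Return {segment: error_rate} for (segment, label, prediction) triples."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for segment, label, prediction in examples:
        totals[segment] += 1
        if label != prediction:
            errors[segment] += 1
    return {segment: errors[segment] / totals[segment] for segment in totals}


def worst_segment(examples):
    """Return the segment with the highest error rate: the next data to engineer."""
    rates = error_rate_by_segment(examples)
    return max(rates, key=rates.get)


# Hypothetical evaluation results: (segment, true label, model prediction)
eval_results = [
    ("scratch", "defect", "defect"),
    ("scratch", "defect", "ok"),
    ("dent", "defect", "defect"),
    ("dent", "ok", "ok"),
    ("glare", "ok", "defect"),
    ("glare", "defect", "ok"),
]

print(error_rate_by_segment(eval_results))
print(worst_segment(eval_results))  # prints: glare
```

Here the `glare` segment would be the first candidate for re-labeling or for collecting more examples, after which the model is retrained and the analysis repeated.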

Steel Sheet Defect Detection Example

In his seminar at TransformX 2021, Andrew Ng showcases the differences between model-centric and data-centric approaches:

A steel plant had developed a computer vision solution to inspect steel sheets for defects. The solution had a baseline accuracy of 76.2%, and the goal was to raise accuracy above 90%. Two approaches were tried: one model-centric and one data-centric. After months of work, the team improving the model achieved no improvement at all, while the team that engineered the data raised accuracy by 16.9 percentage points, to 93.1%, in just a few days.
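Because the 16.9 figure is an absolute gain in percentage points, it is worth seeing what it means in relative terms. This short worked check uses only the two accuracy figures quoted above; the variable names are illustrative.

```python
baseline = 76.2  # baseline accuracy (%)
improved = 93.1  # accuracy after the data-centric work (%)

absolute_gain = improved - baseline             # percentage points
relative_gain = absolute_gain / baseline * 100  # % relative to the baseline

error_before = 100 - baseline  # error rate before (%)
error_after = 100 - improved   # error rate after (%)
error_reduction = (error_before - error_after) / error_before * 100

print(f"absolute gain:   {absolute_gain:.1f} points")
print(f"relative gain:   {relative_gain:.1f}%")
print(f"error reduction: {error_reduction:.1f}%")
```

The output shows a 16.9-point absolute gain, a 22.2% relative gain, and a 71.0% reduction in the error rate (from 23.8% to 6.9%), which is arguably the most telling way to read the result for a defect-inspection system.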

Ensuring a Correct Labeling Approach for a Data-Centric Model

A machine learning model can only be effective if its training data is of high quality, and the consistency and accuracy of the labels are the key determinants of that quality. Several industry-standard practices are used to verify data quality, such as benchmarks, consensus, and review.

Consistent Labeling

When labels are applied to a dataset, they must be consistent across labelers and batches. Ideally, the labeling instructions should define a deterministic function mapping each input (x) to its label (y), so that the same input always receives the same label. Consistent labels that follow such a mapping not only improve model accuracy but can also make the training error decrease much faster.
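One simple way to check whether labels behave like a deterministic function is to look for any input that has received more than one distinct label. This is a minimal sketch, assuming each labeled record is an (input_id, label) pair and that the same input may be labeled several times across labelers or batches; the IDs and label names are hypothetical.

```python
from collections import defaultdict


def find_conflicting_labels(labeled_items):
    """Return {input_id: set_of_labels} for inputs that received more than one label.

    If the labeling instructions define a deterministic mapping x -> y,
    this dictionary should be empty.
    """
    labels_per_input = defaultdict(set)
    for input_id, label in labeled_items:
        labels_per_input[input_id].add(label)
    return {
        input_id: labels
        for input_id, labels in labels_per_input.items()
        if len(labels) > 1
    }


# Hypothetical labels collected across labelers and batches
labeled_items = [
    ("sheet_001", "scratch"),
    ("sheet_001", "scratch"),
    ("sheet_002", "scratch"),
    ("sheet_002", "dent"),  # same input, different label: inconsistent
    ("sheet_003", "no_defect"),
]

print(find_conflicting_labels(labeled_items))
```

Only `sheet_002` is reported. Note that this catches conflicts on identical inputs only; finding near-duplicate inputs with different labels would need a similarity measure, which is beyond this sketch.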

Labeler Consensus and Defining Standards

Use multiple labelers to surface inconsistencies, then define standards that reduce them. Repeatedly find examples where labelers disagree, decide how each point of disagreement should be labeled, and document that decision in the labeling instructions.
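The consensus step can be sketched as a majority vote that also flags every item where labelers disagree. This is a minimal sketch, assuming each item was labeled independently by several labelers and that each item has at least one label; the function name, item IDs, and labels are hypothetical. Ties get no consensus label so they go straight to review.

```python
from collections import Counter


def consensus(labels_by_item):
    """Majority-vote each item and flag items where labelers disagree.

    labels_by_item maps an item id to the non-empty list of labels it received,
    one per labeler. Returns (consensus_labels, disagreements); tied items get
    None as their consensus label.
    """
    consensus_labels = {}
    disagreements = []
    for item_id, labels in labels_by_item.items():
        counts = Counter(labels).most_common()  # sorted by count, highest first
        top_label, top_count = counts[0]
        tied = len(counts) > 1 and counts[1][1] == top_count
        consensus_labels[item_id] = None if tied else top_label
        if len(counts) > 1:  # any disagreement at all is worth a look
            disagreements.append(item_id)
    return consensus_labels, disagreements


labels_by_item = {
    "img_01": ["dent", "dent", "dent"],
    "img_02": ["dent", "scratch", "dent"],
    "img_03": ["scratch", "dent"],
}
final_labels, flagged = consensus(labels_by_item)
print(final_labels)
print(flagged)
```

The flagged items (`img_02` and `img_03` here) are the examples to discuss with labelers; the decision about how to label each one is what then goes into the labeling instructions.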

Accuracy and Review

An annotation's accuracy is determined by how closely it matches the ground truth. To measure it, a domain expert or data scientist labels a subset of the training data, and this subset serves as a benchmark dataset against which labelers' annotations are compared. Benchmark datasets give data scientists insight into each labeler's accuracy, letting them assess the quality of their data over time.
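Scoring labelers against such a benchmark is straightforward. This is a minimal sketch, assuming the expert labels are available as a dictionary from item ID to ground-truth label and that annotations arrive as (labeler, item_id, label) triples; the labeler names and items are hypothetical. Annotations on items outside the benchmark subset are simply skipped.

```python
from collections import defaultdict


def labeler_accuracy(gold, annotations):
    """Score each labeler against an expert-labeled benchmark set.

    gold: {item_id: ground_truth_label} labeled by a domain expert.
    annotations: list of (labeler, item_id, label) triples.
    Only items present in the gold set are scored.
    """
    correct = defaultdict(int)
    scored = defaultdict(int)
    for labeler, item_id, label in annotations:
        if item_id not in gold:
            continue  # not part of the benchmark subset
        scored[labeler] += 1
        if label == gold[item_id]:
            correct[labeler] += 1
    return {labeler: correct[labeler] / scored[labeler] for labeler in scored}


gold = {"img_01": "dent", "img_02": "scratch", "img_03": "no_defect", "img_04": "dent"}
annotations = [
    ("alice", "img_01", "dent"),
    ("alice", "img_02", "scratch"),
    ("alice", "img_03", "dent"),
    ("alice", "img_04", "dent"),
    ("bob", "img_01", "scratch"),
    ("bob", "img_02", "scratch"),
    ("bob", "img_99", "dent"),  # not in the benchmark, ignored
]
print(labeler_accuracy(gold, annotations))
```

Re-running this on each new batch gives the per-labeler trend over time that the paragraph above describes.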

Review is a second way to ensure that the data is accurate: an expert assesses the annotations after labeling is complete. Typically, this is done by visually spot-checking a sample of labels, although some projects review every label. Reviews identify inconsistencies and inaccuracies in the labels themselves, while benchmarks are more often used to judge labeler quality.

Impact of noisy data

Noisy data can have a variety of impacts depending on its quantity and the use case. For example, on a small dataset each noisy label has a proportionally larger effect on the model, whereas on a larger dataset the label noise tends to be averaged out. Noisy data is also especially harmful in critical use cases such as medical image analysis.
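A toy model makes the averaging argument concrete. Assume a binary label, that each label is flipped independently with probability p, and (a strong simplification) that the model's decision for an input effectively amounts to a majority vote over n similar training examples. The probability that the majority is wrong then follows the binomial distribution; the function below is a hypothetical helper, not a library API, and uses `math.comb` (Python 3.8+).

```python
from math import comb


def majority_error(n, p):
    """Probability that a majority vote over n independent binary labels is wrong
    when each label is flipped with probability p (n odd, p < 0.5)."""
    if n % 2 == 0:
        raise ValueError("use an odd n to avoid ties")
    # Wrong whenever more than half of the n labels are flipped
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1))


for n in (1, 5, 25):
    print(f"n={n:>2}: P(wrong) = {majority_error(n, 0.3):.4f}")
```

With 30% label noise, a single example is wrong 30% of the time, five examples reduce that to about 16%, and 25 examples reduce it further. The caveat is important: this averaging only works for random, independent noise. Systematic errors, such as a labeler who always confuses two classes, do not average out, which is one reason consistent labeling instructions matter.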

Using Biz-Tech Analytics for a Data-Centric Approach

At Biz-Tech Analytics, we believe a data-centric approach to data annotation and labeling makes your AI system easier to train and more capable of producing accurate results. We take the following steps to ensure high-quality labels:

- We make our labels consistent by clearly defining rules and deterministic mappings in the instruction set, drawing on the insights of your data scientists and domain experts. We also continually iterate on the instruction set, adding examples to further reduce inconsistencies.

- We focus on consensus labeling to spot inconsistencies. Each data instance is labeled by at least two labelers, and disagreements are resolved by a third reviewer, who also gives feedback to the annotators. Edge cases that cause ambiguity and inconsistency are added to the instruction set.

- Our labelers use their judgment to flag noisy examples, which are then sent for review.

- When a set of labeled data is submitted, a project manager randomly selects a percentage of it for spot-checking and makes any final corrections. Depending on the measured precision and recall, the whole cycle may start again.
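The spot-check step can be sketched as random sampling followed by a precision and recall check on the reviewed items. This is a minimal sketch under stated assumptions: the 5% review fraction and the 0.95 acceptance threshold are hypothetical values, the reviewer's corrected labels are treated as ground truth, and precision and recall are computed for a single class of interest (here a hypothetical `defect` label).

```python
import random

REVIEW_FRACTION = 0.05  # hypothetical: share of each batch that is spot-checked
THRESHOLD = 0.95        # hypothetical: minimum acceptable precision and recall


def spot_check_sample(item_ids, fraction, seed=None):
    """Randomly pick a fraction of the submitted items (at least one) for review."""
    rng = random.Random(seed)
    k = max(1, round(len(item_ids) * fraction))
    return rng.sample(item_ids, k)


def precision_recall(submitted, reviewed, positive):
    """Precision and recall of submitted labels for one class, treating the
    reviewer's corrected labels as ground truth. Undefined values are reported as 0.0."""
    pairs = list(zip(submitted, reviewed))
    tp = sum(1 for s, r in pairs if s == positive and r == positive)
    fp = sum(1 for s, r in pairs if s == positive and r != positive)
    fn = sum(1 for s, r in pairs if s != positive and r == positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


batch_ids = [f"item_{i:03d}" for i in range(200)]
sample = spot_check_sample(batch_ids, REVIEW_FRACTION, seed=0)

# Hypothetical labels for five sampled items, before and after review
submitted = ["defect", "defect", "ok", "ok", "defect"]
reviewed = ["defect", "ok", "ok", "defect", "defect"]

precision, recall = precision_recall(submitted, reviewed, positive="defect")
needs_another_cycle = precision < THRESHOLD or recall < THRESHOLD
print(len(sample), round(precision, 3), round(recall, 3), needs_another_cycle)
```

In this example both precision and recall are 2/3, well below the assumed threshold, so the batch would go back through another labeling cycle. Passing a fixed `seed` makes the sample reproducible for auditing; in practice you would sample once per batch and record which items were checked.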

Please get in touch to learn more about how Biz-Tech Analytics can bring a data-centric workflow to your AI project.