Benchmark Data Quality: Why Human-Curated Test Sets Outperform Automated Alternatives

In the competitive landscape of artificial intelligence development, accurate model evaluation can make or break your AI strategy. While automated benchmark generation tools promise quick deployment and cost savings, human-curated benchmark data consistently deliver superior insights into real-world AI performance. This comprehensive analysis explores why data annotation services specializing in evaluation dataset creation provide more reliable performance metrics than automated alternatives, and how businesses can leverage professional AI data annotation to build trustworthy AI evaluation frameworks.

Understanding Benchmark Data: The Foundation of AI Model Evaluation

Benchmark datasets serve as the gold standard for measuring AI model performance, which makes their accuracy critical. Unlike training data, where some noise can be tolerated, these golden datasets must be meticulously crafted to provide reliable performance metrics. This is where the distinction between human-curated and automated approaches becomes most apparent.

When evaluation data is compromised by automated generation shortcuts, businesses lose the ability to accurately measure model performance, leading to costly deployment failures and reduced user trust.

The Critical Role of Human Expertise in Benchmark Creation

1. Real-World Relevance and Contextual Accuracy:

Human data annotation specialists bring irreplaceable contextual understanding to benchmark creation. Unlike automated systems that generate test cases based on statistical patterns, human experts design evaluation scenarios that reflect actual use cases and edge conditions encountered in production environments.

Human evaluation creates benchmarks that track not just technical performance but how well AI resonates with users, providing comprehensive insights into practical AI effectiveness. This human-centered approach ensures that the benchmark data accurately represents the challenges AI systems will face in real-world applications.

2. Domain-Specific Evaluation Design:

Professional data annotation services employ domain experts who understand the nuances of specific industries and applications. When creating benchmark data for medical AI systems, financial analysis tools, or autonomous vehicles, human specialists design test cases that reflect actual professional workflows and decision-making scenarios.

This domain expertise can translate into measurable gains in AI system performance. Analyses such as those by Perle AI report that expert annotation can lead to up to a 28% improvement in model accuracy, 30–40% faster iteration cycles, and an 85% reduction in real-world errors compared with non-expert labeling.

3. Edge Case Identification and Rare Scenario Coverage:

One of the most significant advantages of human-curated evaluation data lies in comprehensive edge case coverage. Data annotation specialists trained in evaluation design specifically craft test scenarios that probe model limitations and failure modes that automated systems frequently overlook.

The Limitations of Automated Benchmark Generation

1. Pattern Recognition vs. Real-World Complexity:

Automated benchmark generation tools operate by identifying patterns in existing data and creating similar test cases. However, this approach fundamentally misses the unpredictable nature of real-world AI applications. It also raises a contamination risk: because generated test cases resemble existing data, models may already have been exposed to similar questions or benchmark patterns during training, which can artificially inflate their scores.
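The exposure risk described above can be screened for with a simple overlap check. The following is a minimal sketch, assuming whitespace tokenization and word trigrams. The function names `ngrams` and `overlap_ratio` are illustrative and not part of any particular tool.

```python
def ngrams(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Return the set of lowercase word n-grams in `text`."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_ratio(test_item: str, training_corpus: list[str], n: int = 3) -> float:
    """Fraction of the test item's n-grams that also occur in the training corpus."""
    item_grams = ngrams(test_item, n)
    if not item_grams:
        return 0.0  # item shorter than n words: nothing to compare
    corpus_grams: set[tuple[str, ...]] = set()
    for document in training_corpus:
        corpus_grams |= ngrams(document, n)
    return len(item_grams & corpus_grams) / len(item_grams)


# Hypothetical data: a test question that appears verbatim in the training text.
corpus = ["the cat sat on the mat today"]
print(overlap_ratio("the cat sat on the mat", corpus))
```

A high ratio does not prove contamination, but it is a reasonable signal for routing an item to human review before it enters the benchmark.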

2. Bias Amplification in Evaluation Metrics:

Automated systems often replicate and amplify biases present in their source data, producing misleading performance metrics that don’t reflect true model capabilities.

3. Limited Adaptability to Emerging Requirements:

As AI applications evolve rapidly, evaluation requirements change. Human data annotation specialists can quickly adapt benchmark designs to assess new capabilities, incorporate emerging use cases, and respond to changing performance standards. Automated systems require significant reconfiguration and often complete rebuilding to accommodate new evaluation criteria.

Professional Benchmark Creation Methodologies

Standardized Evaluation Framework Design

Expert data annotation services follow rigorous methodologies for creating reliable benchmark data:

- Multi-Dimensional Assessment: Designing test cases that evaluate multiple aspects of AI performance, including accuracy, robustness, fairness, and efficiency.

- Scenario-Based Testing: Creating realistic scenarios that mirror actual use cases rather than abstract statistical challenges.

- Progressive Difficulty Scaling: Implementing graduated difficulty levels that reveal performance degradation patterns and capability boundaries.

- Cross-Domain Validation: Ensuring benchmark relevance across different applications and user contexts.
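Progressive difficulty scaling is easiest to read when results are broken down by level. The sketch below assumes each test case carries a numeric difficulty level assigned by the benchmark designers. The `(level, is_correct)` record format is an assumption made for illustration.

```python
from collections import defaultdict


def accuracy_by_difficulty(results):
    """Compute accuracy per difficulty level.

    `results` is an iterable of (difficulty_level, is_correct) pairs.
    Returns a dict {level: accuracy}, ordered from easiest to hardest.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for level, is_correct in results:
        totals[level] += 1
        correct[level] += int(is_correct)
    return {level: correct[level] / totals[level] for level in sorted(totals)}


# Hypothetical results: accuracy drops as difficulty rises.
sample = [(1, True), (1, True), (2, True), (2, False), (3, False), (3, False)]
print(accuracy_by_difficulty(sample))
```

A sharp drop between two adjacent levels is the kind of capability boundary this breakdown is meant to expose.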

Quality Assurance in Benchmark Development

Professional benchmark creation involves multiple validation layers:

- Expert Review Panels: Domain specialists validate test case relevance and accuracy

- Pilot Testing: Preliminary evaluation with known-performance models to calibrate difficulty

- Statistical Validation: Mathematical verification of benchmark statistical properties

- Real-World Correlation: Validation against actual deployment performance data
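One common form of statistical validation is checking how much a reported score could move because of the particular sample of test cases. The sketch below uses a percentile bootstrap, which is one option among several and is not claimed to be the method any specific provider uses. The function name and defaults are illustrative.

```python
import random


def bootstrap_accuracy_ci(outcomes, n_resamples=2000, confidence=0.95, seed=0):
    """Percentile-bootstrap confidence interval for benchmark accuracy.

    `outcomes` is a list of 1 (correct) and 0 (incorrect), one entry per test case.
    Returns (lower, upper) bounds on accuracy.
    """
    rng = random.Random(seed)  # fixed seed so the interval is reproducible
    n = len(outcomes)
    # Resample the test cases with replacement and record each resample's accuracy.
    means = sorted(sum(rng.choices(outcomes, k=n)) / n for _ in range(n_resamples))
    alpha = (1 - confidence) / 2
    lower = means[int(alpha * n_resamples)]
    upper = means[min(n_resamples - 1, int((1 - alpha) * n_resamples))]
    return lower, upper


# Hypothetical run: 80 correct answers out of 100 test cases.
low, high = bootstrap_accuracy_ci([1] * 80 + [0] * 20)
print(f"accuracy 0.80, 95% CI roughly [{low:.2f}, {high:.2f}]")
```

If two models' intervals overlap heavily, the benchmark may be too small to separate them, which is useful to know before drawing conclusions from a leaderboard.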

Human-AI Collaboration in Evaluation Design

The most effective approach combines human expertise with technological assistance. Comprehensive evaluation suites assess language model capabilities across reasoning, knowledge, mathematics and programming, requiring both human insight and computational processing to create effective benchmarks.

Established AI Benchmark Creation Methodologies

Recent academic research has identified key methodologies for creating reliable AI benchmarks. Stanford’s BetterBench research developed a comprehensive assessment framework considering 46 best practices across an AI benchmark’s lifecycle, providing a scientific foundation for benchmark creation.

Research-Backed Benchmark Design Principles

Comprehensive Lifecycle Assessment: Academic research has established that quality AI benchmarks require evaluation across 46 distinct criteria derived from expert interviews and domain literature. This framework addresses benchmark design from conception through implementation and maintenance.

Reproducibility and Documentation Standards: Current research shows that 17 out of 24 major benchmarks lack sufficient documentation for reproducibility, highlighting the critical need for proper materials and code documentation in the benchmarking process. Professional benchmark creation must prioritize reproducible methodologies.

Task Difficulty Assessment: Microsoft Research has developed a framework based on the ADeLe (annotated-demand-levels) technique, which assesses task difficulty for AI models by applying measurement scales for 18 types of cognitive and knowledge-based abilities.

Quality Assurance in Professional Benchmark Development

Peer Review and Validation: Research by McIntosh et al. (2024) found that many influential benchmarks have been released without rigorous academic peer-review, emphasizing the importance of proper quality control in benchmark development.

Standardized Assessment Criteria: Industry best practices include providing clear code documentation, establishing points of contact, and implementing reproducibility standards that can significantly enhance benchmark usability and accountability.

Industry-Standard AI Benchmark Quality Metrics

High-quality benchmark datasets are characterized by several foundational qualities and evaluation metrics that together ensure they are both rigorous and actionable.

Representativeness & Diversity

- Domain coverage: Datasets must reflect the full scope of real-world scenarios they’re meant to model, whether that means everyday objects (e.g., COCO dataset), video-based question answering (e.g., VQA Human Correction Project), or specialized programming tasks (e.g., code completion, debugging, or logic generation datasets handled in our annotation pipelines).

- Data volume: A large, diverse dataset reduces the risk of memorization, supports statistical rigor, and captures real-world variability, whether in object poses, question types, or code structures.

Clear Annotations & Task-Specific Metrics

- Precise labeling: Uniform, well-defined annotation protocols across different data types (bounding boxes in CV, masks, correct answers in VQA, line-level annotations in code datasets) ensure evaluation consistency.

- Standard metrics: Benchmarks typically include built-in scoring systems:

- CV: mAP, IoU for detection; Dice or Jaccard for segmentation.

- VQA: Answer accuracy and timestamp alignment, with classification logic around question relevance and taxonomy association.

- Coding: Functional correctness, pass rates, syntax validity, and logical coherence of the output code.

- Text generation: BLEU and ROUGE.
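Two of the computer vision metrics listed above are short enough to write out in full. The sketch below assumes boxes are given as `(x_min, y_min, x_max, y_max)` tuples and segmentation masks as sets of pixel coordinates. Both representations are assumptions made for illustration, and production pipelines usually rely on array libraries instead.

```python
def box_iou(a, b):
    """Intersection over Union for two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def dice(predicted, truth):
    """Dice coefficient for two masks given as sets of pixel coordinates."""
    if not predicted and not truth:
        return 1.0  # two empty masks agree perfectly
    return 2 * len(predicted & truth) / (len(predicted) + len(truth))


# Two 2x2 boxes overlapping in a 1x1 square: IoU = 1 / (4 + 4 - 1) = 1/7.
print(box_iou((0, 0, 2, 2), (1, 1, 3, 3)))
```

Dice and Jaccard carry the same information: for a Dice score D, the Jaccard index is D / (2 - D).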

Reproducibility & Accessibility

- Public availability: Datasets like COCO and VQA, and code benchmarks such as HumanEval (released alongside OpenAI’s Codex) and HumanEval-style suites, are hosted publicly, often with standardized leaderboards or test submission platforms.

- Transparent documentation: Includes detailed guidelines, annotation schemas, and clear dataset splits (train/val/test) for replicable results.
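Clear dataset splits are easier to reproduce when assignment depends only on a stable example identifier rather than on shuffling order. The sketch below shows one common way to do that, using a hash of the ID. The percentages and the `assign_split` name are assumptions, not a documented convention of any dataset named above.

```python
import hashlib


def assign_split(example_id: str, val_pct: int = 10, test_pct: int = 10) -> str:
    """Deterministically assign an example to 'train', 'val', or 'test'.

    The same ID always lands in the same split, regardless of dataset order
    or of which other examples are added later.
    """
    digest = hashlib.sha256(example_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in the range 0..99
    if bucket < test_pct:
        return "test"
    if bucket < test_pct + val_pct:
        return "val"
    return "train"


# Hypothetical IDs; rerunning this always prints the same assignments.
for example_id in ["img_0001", "img_0002", "img_0003"]:
    print(example_id, assign_split(example_id))
```

Because the assignment is a pure function of the ID, test items cannot drift into the training split when the dataset is regenerated or extended.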

Benchmark Consistency & Progress Tracking

- Leaderboard-friendly: Many benchmarks (CV and VQA alike) integrate with challenge platforms to track performance evolution over time.

- Standard baselines: Reference implementations (YOLOv8 on COCO, LLaVA on VQA datasets, or GPT-based models on code datasets) anchor performance expectations.

Robustness & Edge Testing

- Edge cases: High-quality datasets include rare or challenging examples (ambiguous questions in VQA, long-tail objects in CV, or nested logic structures in code tasks) to test model limits and surface failure modes.

Established Performance Measurement Standards

Standard Evaluation Metrics: Industry practice employs established metrics including accuracy, precision, recall, and F1 scores for classification tasks, along with task-specific measures for different AI applications.
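For reference, precision, recall, and F1 for a binary classification task can be computed directly from the predictions. This is a minimal sketch with an illustrative function name. Returning 0.0 when a denominator is zero is a convention chosen here, not a universal rule.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Return (precision, recall, f1) for the class labeled `positive`."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


# Hypothetical labels: 2 true positives, 1 false positive, 1 false negative.
print(precision_recall_f1([1, 1, 1, 0, 0], [1, 1, 0, 1, 0]))
```

Accuracy alone can look strong on imbalanced test sets, which is why these three are usually reported together.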

Progress Tracking: Industry-standard practice includes tracking AI progress over time and correlating benchmark scores with key factors like compute requirements and model accessibility.
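Correlating benchmark scores with another factor is often done with a rank correlation, since the relationship is rarely linear. The sketch below implements Spearman's rank correlation in plain Python. Spearman is a choice made for this example, and the sample numbers are made up.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation computed on the ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        return float("nan")  # undefined when one variable is constant
    return cov / (var_x * var_y) ** 0.5


# Made-up example: benchmark scores versus training compute for four models.
print(spearman([1e21, 5e21, 2e22, 1e23], [41.0, 55.5, 63.2, 71.8]))
```

The same function can be applied to benchmark scores versus observed deployment performance to check whether the benchmark ranks models the way production does.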

Quality Assessment Framework

Systematic Evaluation Criteria: Professional benchmark assessment utilizes comprehensive frameworks for evaluating benchmark reliability and validity.

Performance Measurement: Industry standards include analysis across key performance areas including accuracy assessment, efficiency evaluation, and practical applicability testing.

Validation and Reliability Standards

Reproducibility Requirements: Professional benchmark development requires clear documentation and materials for result reproduction, as implementation consistency is crucial for reliable assessment.

Documentation Standards: Quality benchmarks must provide comprehensive documentation, clear evaluation protocols, and standardized assessment procedures.

Industry Applications: Where Human-Curated Benchmarks Excel

Healthcare AI Evaluation

Medical AI systems require benchmark data that reflect clinical reality and patient safety requirements. Human medical experts design evaluation scenarios that test not just diagnostic accuracy but also clinical judgment, safety protocols, and edge cases that could impact patient outcomes.

AI benchmarks of direct clinical relevance are scarce and fail to cover most work activities that clinicians want to see addressed, highlighting the critical need for human-expert-designed evaluation frameworks in healthcare applications.

Software Engineering and Code Quality Assessment

AI systems used in software development and code generation require benchmarks that evaluate not just syntactic correctness but also code quality, security vulnerabilities, maintainability, and adherence to best practices. Human software engineers and security experts design evaluation frameworks that test code review capabilities, vulnerability detection, architectural decision-making, and compliance with coding standards across different programming languages and development contexts.

Autonomous Systems and Safety-Critical Applications

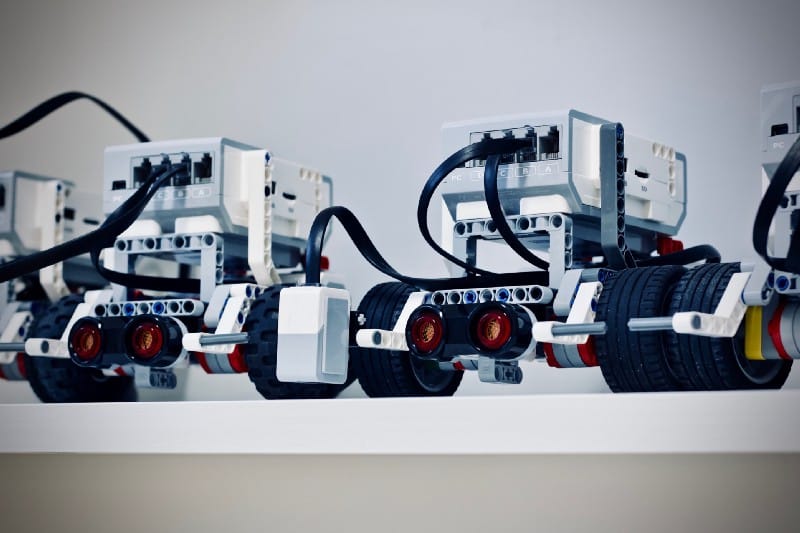

For autonomous vehicles, robotics, and other safety-critical AI systems, benchmark data must comprehensively test safety protocols and failure recovery mechanisms. Human experts design scenarios that evaluate not just normal operation but also emergency responses and failure mode handling.

Building Effective AI Evaluation Strategies

Selecting Professional Evaluation Services

When choosing data annotation services for benchmark creation, consider these critical factors:

- Domain Expertise: Deep understanding of your specific industry and application requirements

- Methodology Rigor: Proven frameworks based on established research for creating statistically valid and practically relevant benchmarks

- Quality Assurance: Comprehensive validation processes ensuring benchmark reliability following academic standards

- Scalability: Ability to create benchmarks that grow with your AI development needs

Internal Benchmark Development Capabilities

Organizations serious about AI evaluation should consider developing internal capabilities:

- Expert Recruitment: Hiring data annotation specialists with evaluation design expertise based on established methodologies

- Methodology Training: Developing comprehensive benchmark creation and validation skills following industry best practices

- Tool Integration: Implementing professional-grade evaluation platforms and analysis tools

- Continuous Improvement: Establishing processes for benchmark updates and refinements

The Future of AI Evaluation: Emerging Trends

Dynamic Benchmark Adaptation

Next-generation benchmark data will adapt to rapidly changing AI capabilities:

- Adaptive Difficulty: Benchmarks that automatically adjust difficulty based on model capabilities

- Real-Time Updates: Evaluation frameworks that incorporate new challenges as they emerge

- Personalized Assessment: Benchmarks tailored to specific deployment contexts and requirements
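Adaptive difficulty can be as simple as a staircase rule: move up a level after a correct answer and down after an incorrect one. The sketch below is a hypothetical illustration of that idea, not a description of any existing benchmark. The level range and the `answers_correctly` callback are assumptions.

```python
def next_difficulty(current, was_correct, min_level=1, max_level=5):
    """Staircase rule: step up after a correct answer, down after an incorrect one."""
    step = 1 if was_correct else -1
    return max(min_level, min(max_level, current + step))


def run_adaptive_session(answers_correctly, n_items=10, start_level=3):
    """Run a short adaptive session and return the sequence of levels visited.

    `answers_correctly` is a callable taking a difficulty level and returning
    True or False. It stands in for evaluating a model on one item at that level.
    """
    level = start_level
    visited = []
    for _ in range(n_items):
        visited.append(level)
        level = next_difficulty(level, answers_correctly(level))
    return visited


# Hypothetical model that succeeds only on levels 1 to 3.
print(run_adaptive_session(lambda level: level <= 3, n_items=6))
```

The level around which the session oscillates gives a rough estimate of where the model's capability boundary sits.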

Human-AI Collaborative Evaluation

Future evaluation systems will optimize the combination of human expertise and AI assistance:

- AI-Assisted Design: Using AI tools to help human experts generate comprehensive test scenarios

- Automated Quality Checking: AI systems that validate human-designed benchmarks for completeness

- Hybrid Validation: Combining automated statistical analysis with human expert judgment
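A simple form of hybrid validation is to let an automated check decide which items need a human expert's attention. The sketch below flags benchmark items whose annotators disagree too much. The 0.8 agreement threshold and the input format are assumptions made for illustration.

```python
from collections import Counter


def flag_for_review(labels_by_item, min_agreement=0.8):
    """Return IDs of items whose majority label falls below `min_agreement`.

    `labels_by_item` maps an item ID to the list of labels assigned by
    different annotators. Items with no labels are flagged as well.
    """
    flagged = []
    for item_id, labels in labels_by_item.items():
        if not labels:
            flagged.append(item_id)
            continue
        majority_count = Counter(labels).most_common(1)[0][1]
        if majority_count / len(labels) < min_agreement:
            flagged.append(item_id)
    return flagged


# Hypothetical annotations from three annotators per question.
annotations = {
    "q1": ["A", "A", "A"],
    "q2": ["A", "B", "A"],
    "q3": ["A", "B", "C"],
}
print(flag_for_review(annotations))
```

The automated pass handles the clear cases, and expert time goes to the ambiguous items where judgment matters most.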

Conclusion: The Strategic Imperative of Professional Benchmark Creation

In today’s AI-driven marketplace, the quality of your golden dataset directly determines your ability to deploy reliable, trustworthy AI systems. While automated benchmark generation offers apparent cost and speed advantages, it cannot match the depth, relevance, and reliability that human-expert-curated benchmark data provide.

Organizations that invest in professional AI data annotation services for golden dataset creation consistently make better AI deployment decisions, reduce production failures, and build more trustworthy AI systems. The evidence is clear: when it comes to evaluating AI system readiness for real-world deployment, human expertise in dataset creation remains irreplaceable.

The key to AI success lies in recognizing that evaluation quality is not just a technical requirement – it’s a strategic imperative that determines whether your AI investments deliver genuine business value. By partnering with experienced data annotation services and investing in skilled data annotation specialists, you’re not just improving your evaluation processes; you’re building the foundation for sustainable AI competitive advantage.

Transform Your AI Evaluation with Expert Benchmark Creation Services

At Biz-Tech Analytics, we believe in the power of data and AI to transform businesses. With our extensive experience across multiple industries and our expertise in data services, we help businesses leverage advanced technologies to solve real-world challenges and drive growth.

Our team of certified data annotation specialists combines deep domain expertise with rigorous evaluation methodologies to create the high-quality benchmark data your AI projects deserve. Whether you need comprehensive evaluation framework design, specialized AI data annotation for testing, or expert model evaluation services, we’re your trusted partner in AI innovation.

Don’t let unreliable evaluation limit your AI potential.

Contact Biz-Tech Analytics today to discover how our expert benchmark creation services can provide the accurate performance insights you need to make confident AI deployment decisions in today’s competitive marketplace.

Get in touch with our AI evaluation experts now – your successful AI deployment starts with reliable benchmark data.