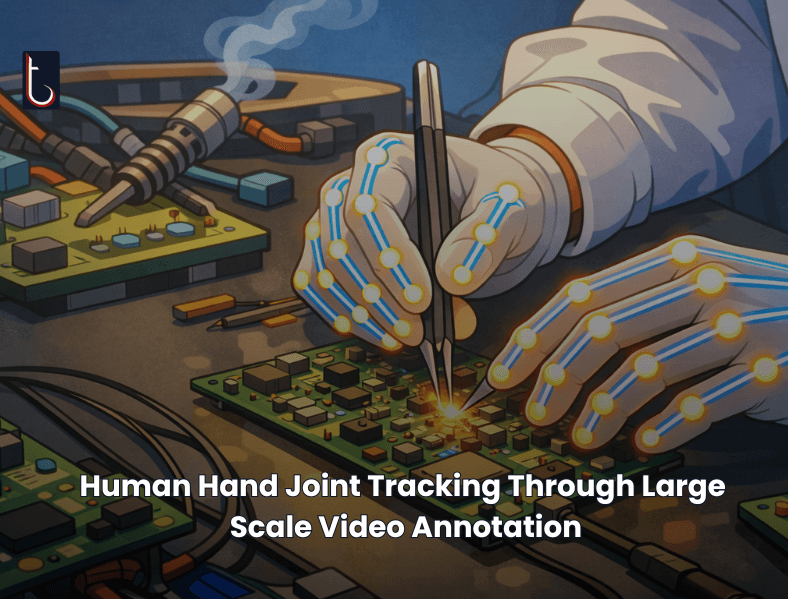

Human Hand Joint Tracking Through Large Scale Video Annotation

Introduction

A large scale skeleton annotation project was executed to support advanced robotics and computer vision research focused on fine motor skill replication. The objective was to accurately capture human hand movements involved in electronic component assembly and convert them into high quality training data for AI models.

The dataset consisted of approximately 4,500 assembly videos featuring a wide range of electronic components and small precision parts. Each video required detailed hand movement analysis to enable realistic digital reconstruction of human assembly actions.

This project highlights our expertise in robotics annotation services, combining fast turnaround times with complex video annotation workflows.

The Challenge

Training models on delicate electronic assembly tasks requires extremely precise motion data. The client needed a dataset that could capture subtle finger movements, hand orientation, and coordination between both hands under real world conditions.

Key challenges included:

- Fine grained joint tracking

Each hand required annotation of 21 individual joints, demanding high spatial accuracy across thousands of frames to support downstream computer vision data labeling for AI and robotics models.

- Occlusion and visual noise

Hands were frequently partially hidden behind components or tools. Blurred frames and motion artifacts made finger identification difficult in several sequences.

- Gloves and finger ambiguity

Many assembly tasks were performed while wearing gloves, reducing finger visibility. In addition, when both hands overlapped, distinguishing which finger belonged to which hand required careful frame by frame reasoning.

- Component diversity

The videos covered assembly of multiple electronic component types, each introducing different grip styles, hand poses, and interaction patterns that needed consistent labeling.
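To make the 21 joint requirement concrete, here is a minimal sketch of what a per-hand annotation record could look like. The joint layout (one wrist point plus four points on each of five fingers) is a common convention for 21 point hand skeletons and is an assumption here; the project's actual joint mapping is not described in this case study. All names (`JOINT_NAMES`, `Keypoint`, `HandAnnotation`) are hypothetical.

```python
from dataclasses import dataclass
from typing import List

# Assumed layout: 1 wrist point + 4 points on each of 5 fingers = 21 joints.
# The segment names are illustrative, not the project's real schema.
FINGERS = ["thumb", "index", "middle", "ring", "pinky"]
SEGMENTS = ["base", "lower", "upper", "tip"]
JOINT_NAMES = ["wrist"] + [f"{f}_{s}" for f in FINGERS for s in SEGMENTS]


@dataclass
class Keypoint:
    x: float               # pixel column in the frame
    y: float               # pixel row in the frame
    visible: bool = True   # False when the joint is occluded or blurred


@dataclass
class HandAnnotation:
    side: str                  # "left" or "right"
    keypoints: List[Keypoint]  # ordered to match JOINT_NAMES

    def validate(self) -> None:
        """Raise ValueError if the record does not match the assumed schema."""
        if self.side not in ("left", "right"):
            raise ValueError(f"unknown hand side: {self.side!r}")
        if len(self.keypoints) != len(JOINT_NAMES):
            raise ValueError(
                f"expected {len(JOINT_NAMES)} keypoints, got {len(self.keypoints)}"
            )
```

Keeping an explicit `visible` flag and a `side` field on every record is one way to represent the occlusion and overlapping hand cases listed above without discarding the frame.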

The Solution

We designed a robust annotation framework tailored specifically to robotics focused video annotation and AI training data.

Our approach included:

- Structured skeleton annotation workflow

Each video was annotated using a standardized joint mapping system, ensuring consistent labeling of 21 key points per hand across the dataset.

- Context aware frame analysis

Annotators were trained to infer finger positioning during partial occlusions using motion continuity and interaction context from surrounding frames.

- Quality driven review loops

Multiple validation passes were implemented to maintain accuracy despite visual challenges such as gloves, blur, and overlapping hands.

- Scalable execution

The project was executed at scale with over 7,000 annotation tasks completed within tight timelines, demonstrating our strength in fast turnaround robotics annotation services.
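The motion continuity idea behind context aware frame analysis can be illustrated with a small sketch. In the project this inference was done by trained annotators; the code below only shows how a tool might propose a starting estimate for an occluded joint by linearly interpolating between the nearest visible frames, leaving the final call to a human reviewer. The function name `fill_occluded` and the track representation are hypothetical.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def fill_occluded(track: List[Optional[Point]]) -> List[Optional[Point]]:
    """Linearly interpolate occluded frames (None) for one joint.

    Only gaps bounded by a visible position on both sides are filled.
    Leading and trailing gaps stay None so a reviewer handles them.
    """
    filled = list(track)
    last_seen = None  # index of the most recent visible frame
    for i, point in enumerate(track):
        if point is None:
            continue
        if last_seen is not None and i - last_seen > 1:
            x0, y0 = track[last_seen]
            x1, y1 = point
            span = i - last_seen
            for k in range(last_seen + 1, i):
                t = (k - last_seen) / span  # fraction of the way through the gap
                filled[k] = (x0 + t * (x1 - x0), y0 + t * (y1 - y0))
        last_seen = i
    return filled


# One joint tracked over six frames, hidden in frames 1 to 3 and frame 5.
track = [(0.0, 0.0), None, None, None, (4.0, 8.0), None]
estimate = fill_occluded(track)
```

Linear interpolation is a deliberately simple stand-in: it ignores interaction context such as grip style, which is why estimates like these would still need the validation passes described above.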

The Result

The final dataset delivered highly accurate, structured skeleton annotations suitable for advanced robotics and computer vision applications.

Main outcomes included:

- Over 7,000 completed annotation tasks with 95% accuracy

- Precise hand and finger movement data across 4,500 assembly videos

- Coverage of diverse electronic component assembly scenarios

- High quality video annotation data ready for training robot hands and manipulators

This dataset can be directly used to train robot hands for electronic component assembly, enabling robots to learn human like grasping, positioning, and motion sequencing for fine motor tasks. The project demonstrates our ability to deliver reliable computer vision data labeling for robotics and complex AI training data solutions at scale.

If you found this interesting, contact us to learn more!